At CES 2026 in Las Vegas, Nvidia once again reminded the technology world why it sits at the center of the artificial intelligence boom. The company launched Vera Rubin, its next major AI computing platform and the latest step in Nvidia’s rapid pace of innovation.

The announcement comes at a time when demand for AI systems is exploding, driven by advanced chatbots, digital assistants, image and video generation, and increasingly complex decision-making software. Nvidia’s leadership says Vera Rubin was designed precisely for this moment.

Nvidia shares edged slightly higher in premarket trading following the reveal, signaling cautious optimism from investors who are closely tracking how the company plans to stay ahead of rivals in the fast-moving AI hardware market.

What Is the Vera Rubin Platform?

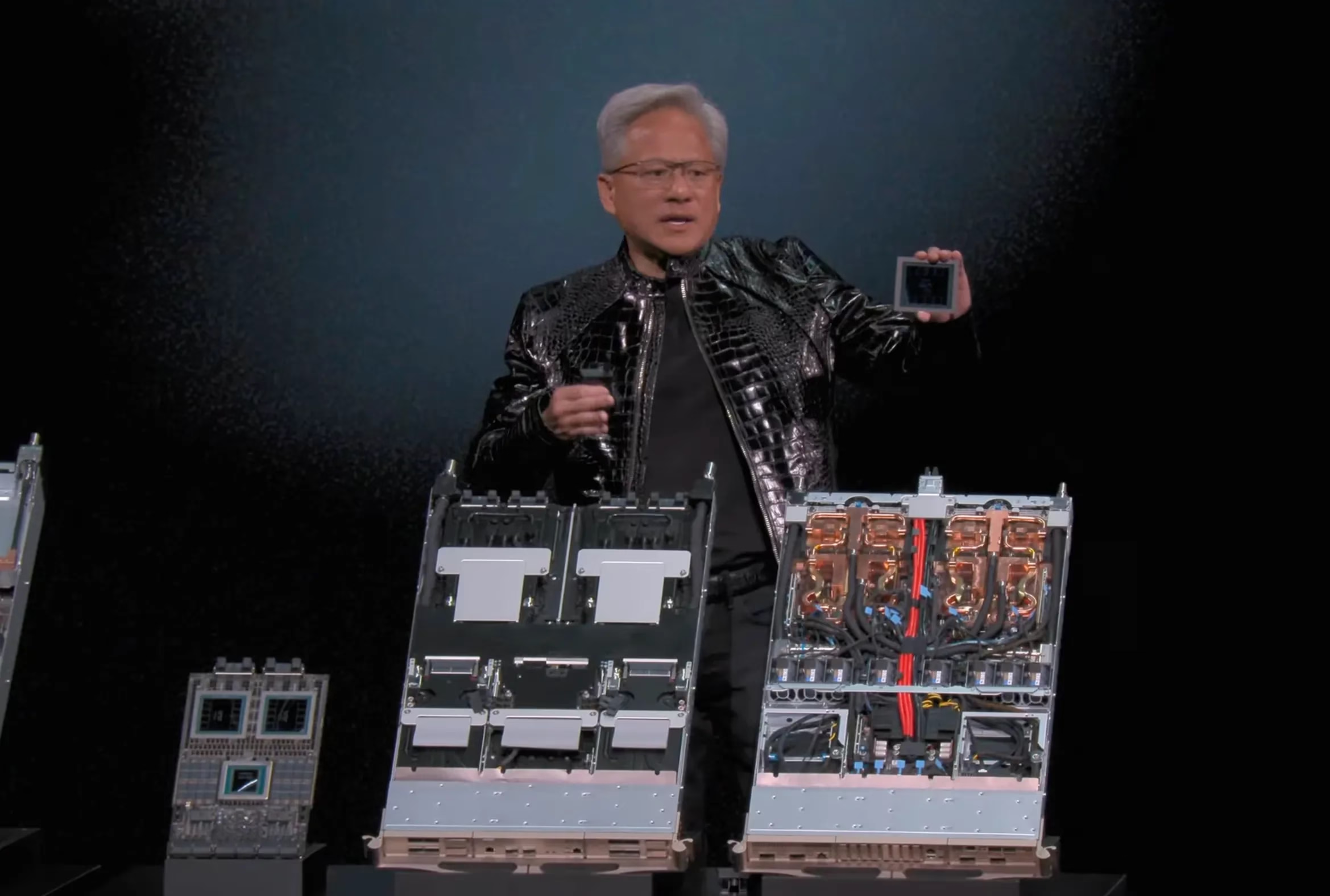

Vera Rubin is not just a single chip. It is part of a broader Rubin platform, which consists of six tightly integrated chips working together as one system. At the heart of the platform is a module that pairs one Vera central processing unit (CPU) with two Rubin graphics processing units (GPUs).

In simple terms, Nvidia is combining general-purpose processors with the graphics processors that do the heavy AI math, packaging them as a single, more powerful unit. This approach allows the system to handle extremely large AI tasks faster and more efficiently than before.

The Rubin platform is built to support modern AI systems that do more than just answer questions. These systems can plan, reason through problems step by step, and decide which specialized tools or models to use for different tasks.

Built for the Next Generation of AI

Nvidia says the Rubin platform is especially suited for advanced AI systems that behave more like digital agents. These systems can analyze a request, break it into parts, and route each part to the most suitable model for an answer.

This kind of AI is becoming more common as companies move beyond basic chatbots and toward tools that can perform research, coding, customer support, and complex decision-making.

According to Nvidia CEO Jensen Huang, the timing of the launch is critical. AI demand is rising sharply not only for training new models, but also for running them at scale. Both activities require enormous computing power, and companies are searching for ways to lower costs while improving performance.

More Than Just Computing Power

Beyond the main processor, the Rubin platform includes additional chips that handle data movement, networking, and security. These components are essential because modern AI systems rely on massive amounts of data flowing quickly between machines.

Nvidia’s platform includes new networking and storage technology that helps AI systems move data between machines faster and store large volumes of information more efficiently. This matters because today’s AI models are growing to unprecedented sizes, some with trillions of parameters, and increasingly work through long chains of reasoning steps.

Without fast and reliable data handling, even the most powerful processors can become bottlenecked.

From Individual Servers to AI Supercomputers

Nvidia is packaging the Rubin platform into a rack-scale system called Vera Rubin NVL72, which links 72 Rubin GPUs so they operate as one machine. When multiple NVL72 racks are connected, they form what Nvidia calls a DGX SuperPOD.

These SuperPODs are effectively massive AI supercomputers, and systems of this kind are already in high demand. Technology giants such as Microsoft, Google, Amazon, and Meta are spending billions of dollars to build AI supercomputers in their data centers.

These companies use such infrastructure to train large AI models, run cloud services, and support consumer-facing applications used by millions of people daily.

Lower Costs, Higher Efficiency

One of Nvidia’s strongest claims about the Rubin platform is efficiency. The company says Rubin can dramatically reduce the number of graphics processors required to train certain advanced AI models compared with its previous generation, known as Grace Blackwell.

Using fewer processors to do the same work means companies can save on electricity, cooling, and hardware costs. It also allows spare computing capacity to be redirected to other tasks, improving overall productivity.

Nvidia also says Rubin significantly lowers the cost of running AI models once they are deployed. This is especially important because many AI systems are expensive not only to build, but also to operate day after day.

Why Token Costs Matter

To understand this improvement, it helps to think about how AI systems work. AI models break information, such as text, images, or video, into small pieces called tokens before processing it. Each token requires computing power and energy to process.

As AI systems become more advanced, the number of tokens they handle per request grows rapidly, because reasoning models and agents generate many intermediate steps before producing an answer. This makes AI increasingly costly to operate, especially for companies serving millions of users.
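To make that scaling concrete, here is a minimal Python sketch of how token volume drives serving cost. It assumes a flat price per million tokens, and every figure in it (request volume, tokens per request, the price) is a hypothetical placeholder chosen for illustration, not an Nvidia or industry number.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """A hypothetical AI service: how many requests it serves per day and how
    many tokens (input plus output) each request consumes on average."""
    requests_per_day: int
    tokens_per_request: int


def daily_tokens(w: Workload) -> int:
    """Total tokens processed per day."""
    return w.requests_per_day * w.tokens_per_request


def daily_cost(w: Workload, usd_per_million_tokens: float) -> float:
    """Daily serving cost, assuming a flat (hypothetical) price per million tokens."""
    return daily_tokens(w) / 1_000_000 * usd_per_million_tokens


# Illustrative numbers only: a simple chatbot vs. a reasoning agent that
# generates many intermediate steps for the same one million daily requests.
chatbot = Workload(requests_per_day=1_000_000, tokens_per_request=500)
agent = Workload(requests_per_day=1_000_000, tokens_per_request=20_000)

PRICE = 2.0  # hypothetical USD per million tokens

for name, w in [("chatbot", chatbot), ("agent", agent)]:
    print(f"{name}: {daily_tokens(w):,} tokens/day, ${daily_cost(w, PRICE):,.2f}/day")
```

In this simple model cost is linear in token count, so the agent costs 40 times as much to run as the chatbot at the same price, and any cut in the price per token scales the savings across the whole workload.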

Nvidia says the Rubin platform sharply reduces these operating costs, making AI services more affordable over the long term. This could be a key advantage as businesses look to expand AI usage without seeing their expenses spiral out of control.

A Strategic Shift, Not Just a Product Launch

The Vera Rubin launch is more than a technical upgrade. It reflects Nvidia’s broader strategy of delivering major improvements on a predictable, annual schedule. This gives customers confidence that investing in Nvidia’s ecosystem will continue to pay off over time.

By designing its processors, networking tools, and software together, Nvidia is aiming to offer a complete solution rather than standalone components. This approach makes it harder for competitors to match Nvidia’s performance without building a similarly integrated system.

Analysis: What Vera Rubin Means for Nvidia (NVDA) and Investors

From a financial perspective, the Vera Rubin platform strengthens Nvidia’s position as the backbone of the global AI industry. Demand for AI computing shows no signs of slowing, and large technology companies are locked in a race to secure the most powerful hardware available.

Efficiency improvements are especially important. As AI adoption spreads, companies are becoming more cost-conscious. Nvidia’s promise of lower operating costs could make Rubin systems more attractive even at premium prices.

For Nvidia, this means continued pricing power, strong demand visibility, and deeper relationships with its largest customers. Hyperscalers that invest heavily in Rubin-based systems are likely to stay within Nvidia’s ecosystem for years.

However, expectations are also rising. Investors will closely watch whether Nvidia can deliver these efficiency gains at scale and maintain supply amid intense demand. Competition from rivals is increasing, but Nvidia’s integrated approach gives it a meaningful edge for now.

In summary, Vera Rubin is not just another chip; it signals that Nvidia intends to lead the AI revolution well into the next decade. For long-term investors, the launch reinforces Nvidia’s role as a central player in one of the most important technological shifts of our time.
